Converse360 Agent Safeguarding

The examples below aim to illustrate some of the aspects of guardrails behind the scenes, the controls and barriers to avoid Agent LLM’s going off course. These can be interceptors from blocking to masking information that goes in from the end user through to what is generated by the LLM based on it’s understanding and the instructions into the model. Technically the platform itself hides this level detail making it simple, and easy to implement multi-agent and hybrid agent scenarios without technical or programming knowledge. In laymen’s terms. We take care of it for you, saving you a lot of time and effort, so apart from some aspects of testing and checks, there is already an industry recognised strong set of security and privacy principles applied to your new agent.

Safeguarding AI Systems from Misuse

The rapid development of AI has revolutionised many industries unlocking significant benefits but also presenting new risks. As AI systems become more advanced, the potential for misuse increases. From generating harmful content to inadvertently disclosing sensitive information, the challenges of responsibly deploying AI are numerous. To address these challenges, converse360 SaaS has introduced Guardrails, a feature designed to mitigate the risks associated with AI-generated content. This article explores the various types of guardrails available in Converse360 SaaS, including protections against prompt attacks, subject context consistency, PII exposure, competitor mentions, profanities, and hallucinations.

Understanding Converse360 SaaS and Guardrails

Assist-Me from converse360 is a comprehensive platform that allows developers to build and deploy generative AI applications. By providing access to various foundational models (FMs), Assist-Me serves as the back bone for creating customised AI solutions. Guardrails act as safety mechanisms, ensuring that AI outputs align with ethical guidelines, company policies, and legal requirements.

Guardrails are essentially rules that monitor user inputs and AI outputs, filtering or blocking content that is deemed unsafe or inappropriate. These safeguards are model-agnostic, meaning they can be applied across different foundational models, which is a necessity as the range of evolving new models emerge.

Protecting Against Prompt Attacks

One of the emerging threats in the AI landscape is the prompt attack, where malicious users craft inputs designed to bypass the intended use of the AI system. For example, a prompt attack might trick an AI model into generating harmful content, such as hate speech or misinformation. Guardrails in Assist-Me can be configured to detect and block such prompt attacks, ensuring that the AI system remains secure and produces outputs that are consistent with its intended purpose.

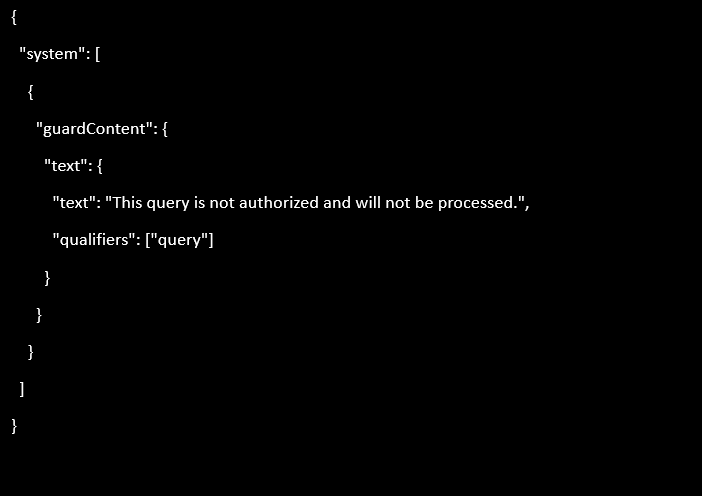

Here’s an example of how you might set up a guardrail to block unauthorised queries:

This guardrail checks the input against a list of authorised queries. If the input does not match the allowed queries, it is blocked, and the user is notified that their query is not authorised.

Ensuring Subject Context Consistency

AI models are capable of generating content across various subjects, but ensuring that the content remains consistent with the intended context is critical. Guardrails for subject context consistency help maintain this alignment by checking the content generated by the AI model against predefined guidelines.

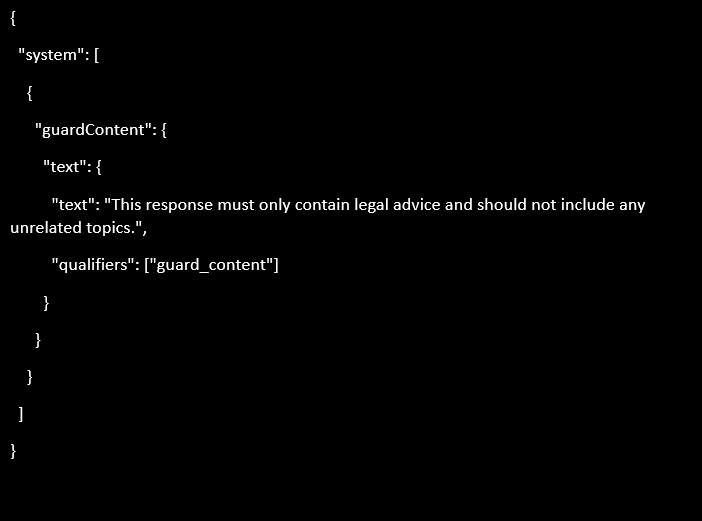

For example, if an AI system is designed to provide legal advice, a guardrail can ensure that the model does not stray into unrelated topics, such as medical advice. By enforcing consistency in the subject matter, guardrails help maintain the trustworthiness and reliability of AI-generated content.

Here’s a JSON example of how you might enforce subject consistency:

Protecting Personally Identifiable Information (PII)

The exposure of Personally Identifiable Information (PII) is one of the most significant risks in the digital age. PII includes data such as names, addresses, phone numbers, and other identifiers that can be used to trace an individual's identity. Inadvertent disclosure of PII through AI-generated content can lead to severe privacy breaches and legal ramifications.

Assist-Me's guardrails offer robust protections against PII exposure. These guardrails can scan the AI model's outputs for any signs of PII and either block the content or anonymise the sensitive information. For example, if a model output contains a credit card number or a passport number, the guardrail can detect this and take appropriate action, such as replacing the sensitive data with a placeholder or completely blocking the response.

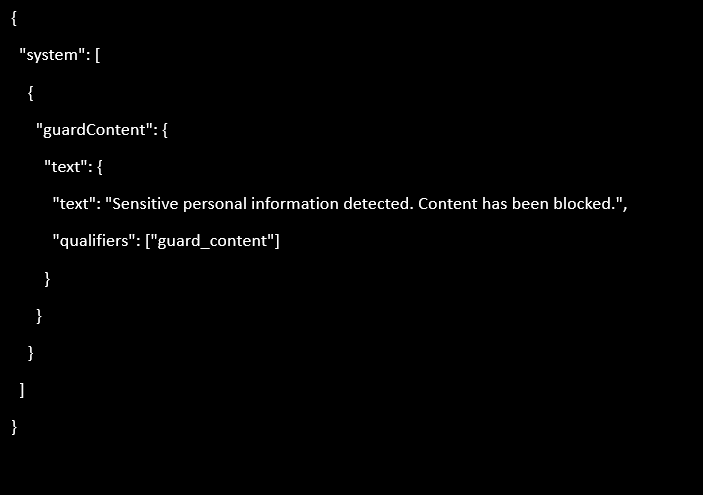

Here’s an example of a PII protection guardrail in JSON:

This guardrail is configured to detect and block any output containing PII, ensuring compliance with privacy regulations.

Blocking Competitor Mentions

In competitive industries, it's essential to ensure that AI-generated content does not inadvertently promote or reference competitors. Guardrails in converse360 SaaS can be configured to detect and block mentions of specific competitors, ensuring that the AI outputs align with the company's branding and marketing strategies.

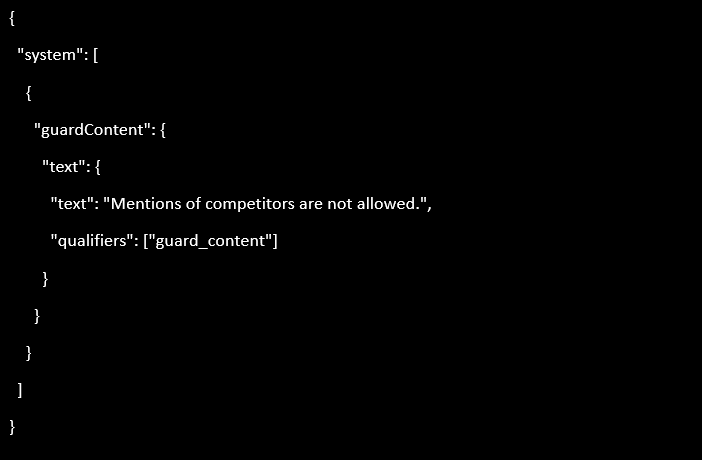

Here’s an example guardrail for blocking competitor mentions:

This guardrail scans the output for competitor names and blocks any content that contains such references, helping to maintain brand integrity.

Filtering Profanities and Offensive Language

Profanity and offensive language can severely damage brand reputation. To mitigate this risk, Assist-Me's guardrails include content filters specifically designed to detect and block profanities.

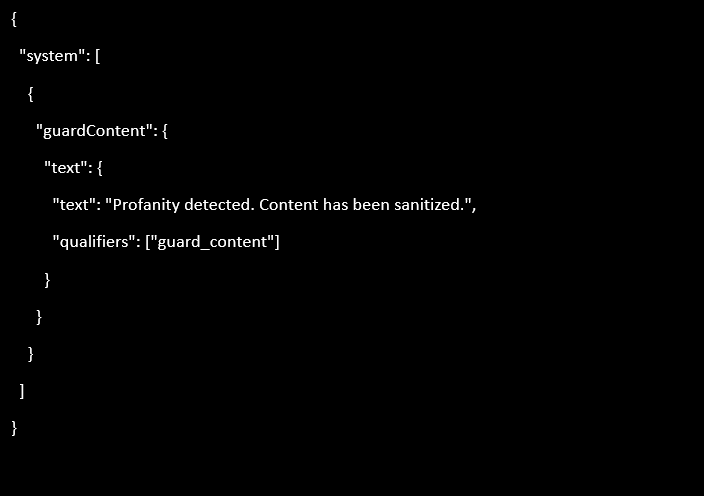

Here’s an example guardrail for filtering profanities:

These content filters can be configured with varying levels of sensitivity, from low to high. The filtering mechanism works by comparing the AI output against a list of predefined offensive words and phrases. If a match is found, the guardrail can either block the entire response or sanitise it by removing the offending language.

This guardrail ensures that AI outputs remain professional and appropriate for all audiences.

Preventing AI Hallucinations

AI hallucinations occur when a model generates content that is factually incorrect or not grounded in reality. This can be particularly dangerous in contexts where accuracy is critical, such as healthcare or legal advice. Guardrails in Assist-Me can help prevent hallucinations by ensuring that the AI model's outputs are consistent with verified information.

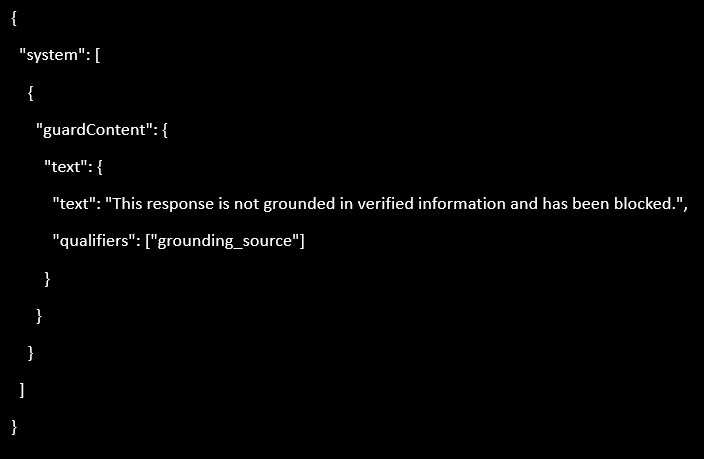

For example, a guardrail can be set up to require that all outputs be based on specific, trusted sources. If the model generates content that cannot be traced back to one of these sources, the output is blocked or flagged for review.

Here’s an example guardrail to prevent AI Hallucinations:

This guardrail ensures that the AI system only produces outputs that are based on reliable sources, reducing the risk of hallucinations.

Monitoring and Auditing AI Interactions

In addition to specific guardrails, Assist-Me offers tools for monitoring and auditing AI interactions. By integrating with monitoring tools, organisations can track and analyse user inputs and AI outputs, identifying any instances where guardrails were triggered. This provides valuable insights into how the AI system is being used and whether any adjustments are needed to the guardrails or the underlying models.

Auditing capabilities also allow organizations to maintain a record of AI interactions, which can be useful for compliance purposes. The ability to monitor and audit AI interactions helps ensure that AI systems operate within legal and ethical boundaries.

Conclusion: Guardrails as a Key Component of Responsible AI

Guardrails in Assist-Me represent a significant advancement in AI governance. By providing a flexible and powerful toolset for filtering and controlling AI-generated content, Assist-Me makes it easier for organisations to deploy AI systems responsibly. Whether it's protecting against prompt attacks, ensuring subject context consistency, safeguarding PII, blocking competitor mentions, filtering profanities, or preventing hallucinations, guardrails offer a comprehensive solution to the challenges of AI content moderation.

As AI continues to evolve, the importance of responsible deployment cannot be overstated. Guardrails in Assist-Me provide the necessary safeguards to ensure that AI systems are used ethically and effectively, delivering value to users while minimizing risks. As the technology matures, we can expect to see even more sophisticated guardrails and content controls, further enhancing the safety and reliability of AI applications.

More articles

Guardrail Guide – Protecting your audience, brand, reputation & ensuring legal compliance

Protection against prompt attacks, subject context consistency, PII exposure, competitor mentions, profanities and hallucinations...

Building Powerful RAG Agents: Vector Databases and LLM Caching

RAG agents combine the strengths of traditional information retrieval methods with the generative capabilities of LLMs...

Agent Assist: Empower Customer Service with Real-Time Guidance

As customer expectations rise, the need for effective tools to assist agents becomes crucial...